🔍 Data Ethics: Essential Concepts for Reading Comprehension

Data ethics explores the principles and standards governing the collection, storage, analysis, and use of data. It aims to ensure that technological advancements respect individual rights, promote fairness, and minimize harm. Reading comprehension (RC) passages on this topic often examine issues such as privacy, bias, and accountability in data-driven systems. Understanding these concepts enables readers to critically evaluate ethical challenges in a data-centric world.

📋 Overview

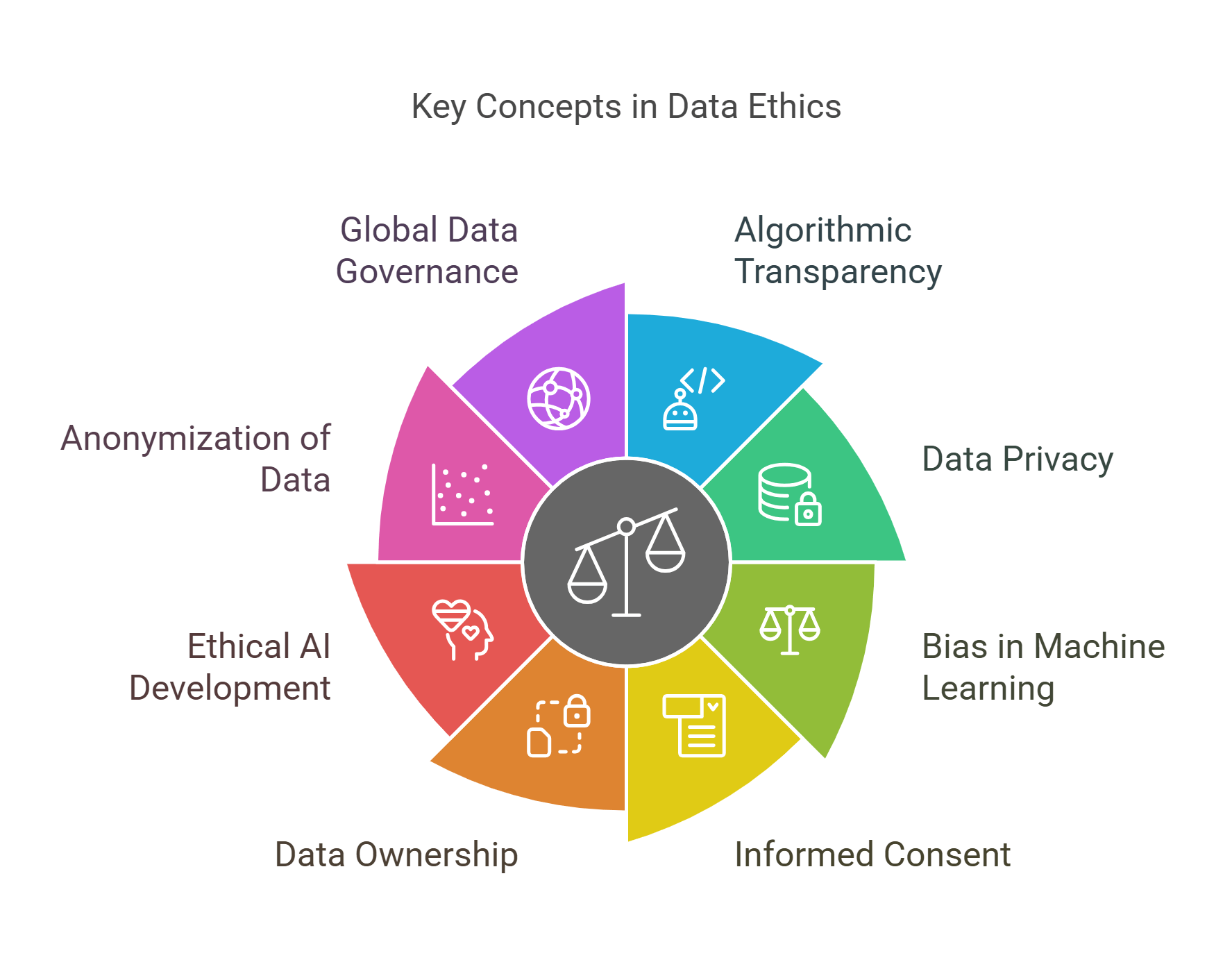

This guide explores the following essential concepts in data ethics:

- Algorithmic Transparency

- Data Privacy

- Bias in Machine Learning

- Informed Consent

- Data Ownership

- Ethical AI Development

- Anonymization of Data

- Global Data Governance

- Responsible Data Usage

- Surveillance Ethics

🔍 Detailed Explanations

1. Algorithmic Transparency

Algorithmic transparency refers to the ability to understand, evaluate, and explain how algorithms make decisions. This concept is critical for ensuring fairness and accountability in automated systems.

- Key Aspects:

- Explainability: Algorithms should provide clear, comprehensible reasoning behind their outputs.

- Auditability: Systems should be open to external reviews to detect flaws or biases.

- Accountability: Developers and organizations must take responsibility for algorithmic decisions.

- Challenges:

- Complex machine learning models (e.g., neural networks) are often opaque, making it difficult to interpret their decisions.

- Full transparency can conflict with intellectual property protection, since disclosing how an algorithm works may expose trade secrets.

Example: Algorithmic transparency is essential in credit scoring systems to explain why certain individuals are approved or denied loans.
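To make the credit-scoring example concrete, here is a minimal sketch of a scoring rule that reports how much each input contributed to the decision. The feature names, weights, and threshold are hypothetical and chosen only for illustration; real credit models are far more complex and are not described in this guide.

```python
# Hypothetical weights and threshold, invented for illustration only.
WEIGHTS = {"income_thousands": 0.5, "years_employed": 2.0, "missed_payments": -10.0}
THRESHOLD = 40.0


def score_with_explanation(applicant):
    """Return (approved, total score, per-feature contributions)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    total = sum(contributions.values())
    return total >= THRESHOLD, total, contributions


applicant = {"income_thousands": 60, "years_employed": 4, "missed_payments": 1}
approved, total, contributions = score_with_explanation(applicant)
print("approved:", approved, "score:", total)
# List the contributions from most negative to most positive.
for name, value in sorted(contributions.items(), key=lambda item: item[1]):
    print(f"  {name}: {value:+.1f}")
```

Because every contribution is visible, a denied applicant can see which factor lowered the score. This is the kind of explanation that opaque models struggle to provide.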

Explained Simply: Algorithmic transparency is like turning on the lights in a factory—you can see how every part of the process works.

2. Data Privacy

Data privacy refers to the rights and measures that protect individuals’ personal information from unauthorized access, misuse, or disclosure. It ensures that people maintain control over how their data is collected, stored, and shared.

- Core Principles:

- Consent: Data should only be collected with the explicit permission of the individual.

- Minimization: Only the data necessary for a specific purpose should be collected.

- Security: Adequate measures should be taken to protect data from breaches.
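As a rough sketch of collecting only what a purpose requires, the snippet below filters a submitted form down to the fields needed for that purpose. The purposes, field names, and the `REQUIRED_FIELDS` mapping are assumptions made up for this illustration.

```python
# Hypothetical mapping from a purpose to the fields it actually needs.
REQUIRED_FIELDS = {
    "newsletter": {"email"},
    "shipping": {"name", "street", "city", "postal_code"},
}


def minimize(record, purpose):
    """Keep only the fields needed for the stated purpose; drop the rest."""
    allowed = REQUIRED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


signup_form = {
    "email": "reader@example.com",
    "name": "A. Reader",
    "birth_date": "1990-01-01",
    "phone": "555-0100",
}
print(minimize(signup_form, "newsletter"))
```

Here the birth date and phone number are never stored for a newsletter signup, so they cannot later be leaked or misused.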

- Legal Frameworks:

- GDPR (General Data Protection Regulation): A European Union regulation ensuring data protection and privacy rights.

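- CCPA (California Consumer Privacy Act): A California state law that gives residents the right to know what personal information businesses collect about them, to request its deletion, and to opt out of its sale.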

Example: Social media platforms must disclose how they use personal data and allow users to control their privacy settings.

Explained Simply: Data privacy is like locking your diary—it ensures that only you decide who reads it.

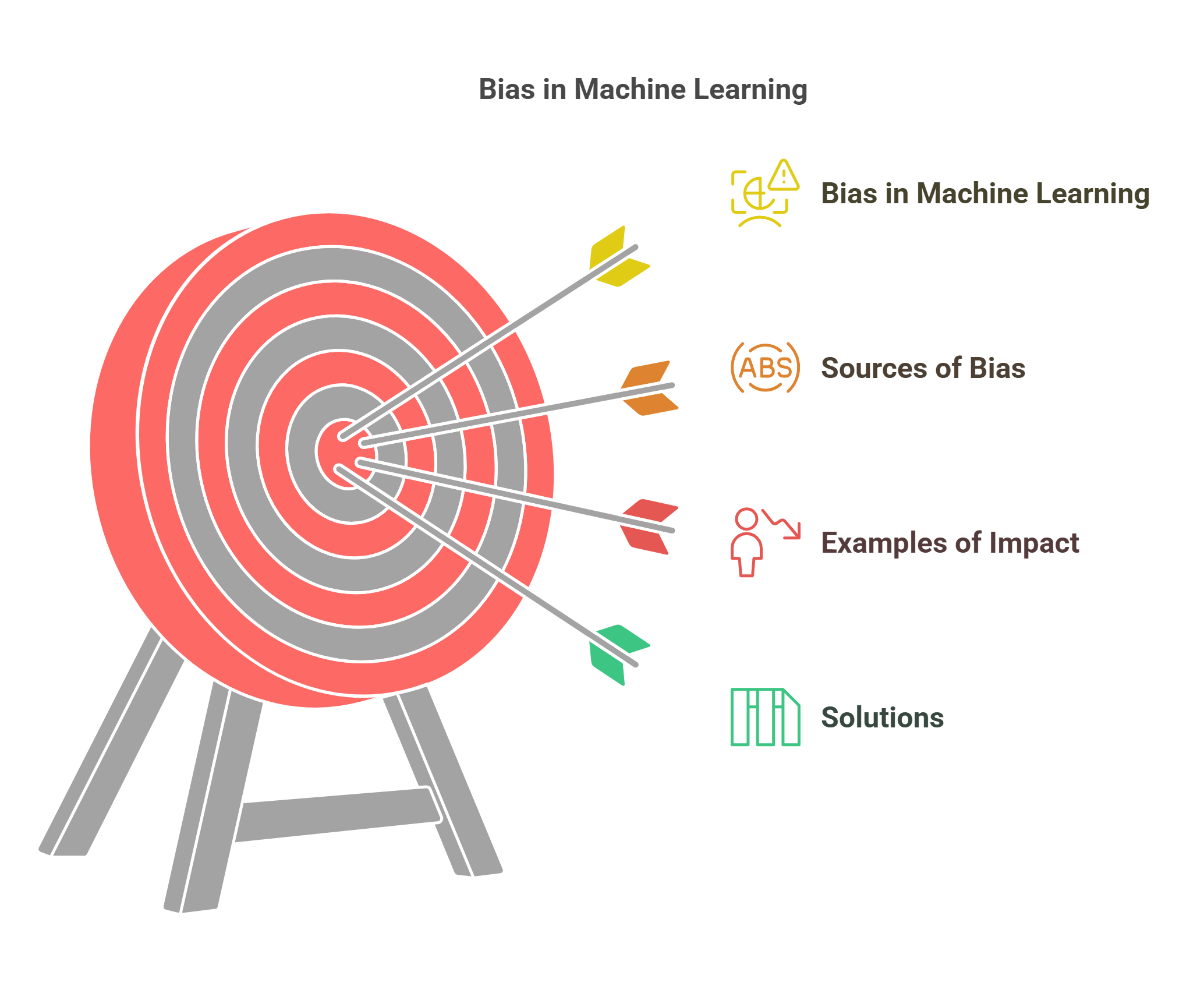

3. Bias in Machine Learning

Bias in machine learning arises when algorithms produce unfair or discriminatory outcomes due to biased training data, flawed design, or systemic inequalities. This bias can perpetuate or amplify existing societal prejudices.

- Sources of Bias:

- Data Bias: Training datasets that underrepresent or misrepresent certain groups.

- Algorithmic Bias: Flaws in the design or assumptions of the algorithm.

- Feedback Loops: Systems reinforcing biases over time (e.g., predictive policing).

- Examples of Impact:

- Facial recognition systems misidentifying individuals based on race or gender.

- Biased hiring algorithms favoring certain demographics over others.

- Solutions:

- Regular audits to identify and mitigate bias.

- Diversifying datasets and development teams.
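One simple form an audit can take is comparing how often each group receives a favorable outcome. The sketch below uses made-up hiring decisions for two hypothetical groups. The ratio it computes is only one of many possible fairness measures, and a low value is a prompt to investigate rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: share selected}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    return min(rates.values()) / max(rates.values())


# Made-up hiring decisions for two hypothetical groups.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)
print(rates)
print("ratio:", disparity_ratio(rates))
```

In this toy data, group_b is selected half as often as group_a, which is the kind of gap a regular audit is meant to surface.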

Explained Simply: Bias in machine learning is like teaching a robot with one-sided stories—it learns to see the world in a skewed way.

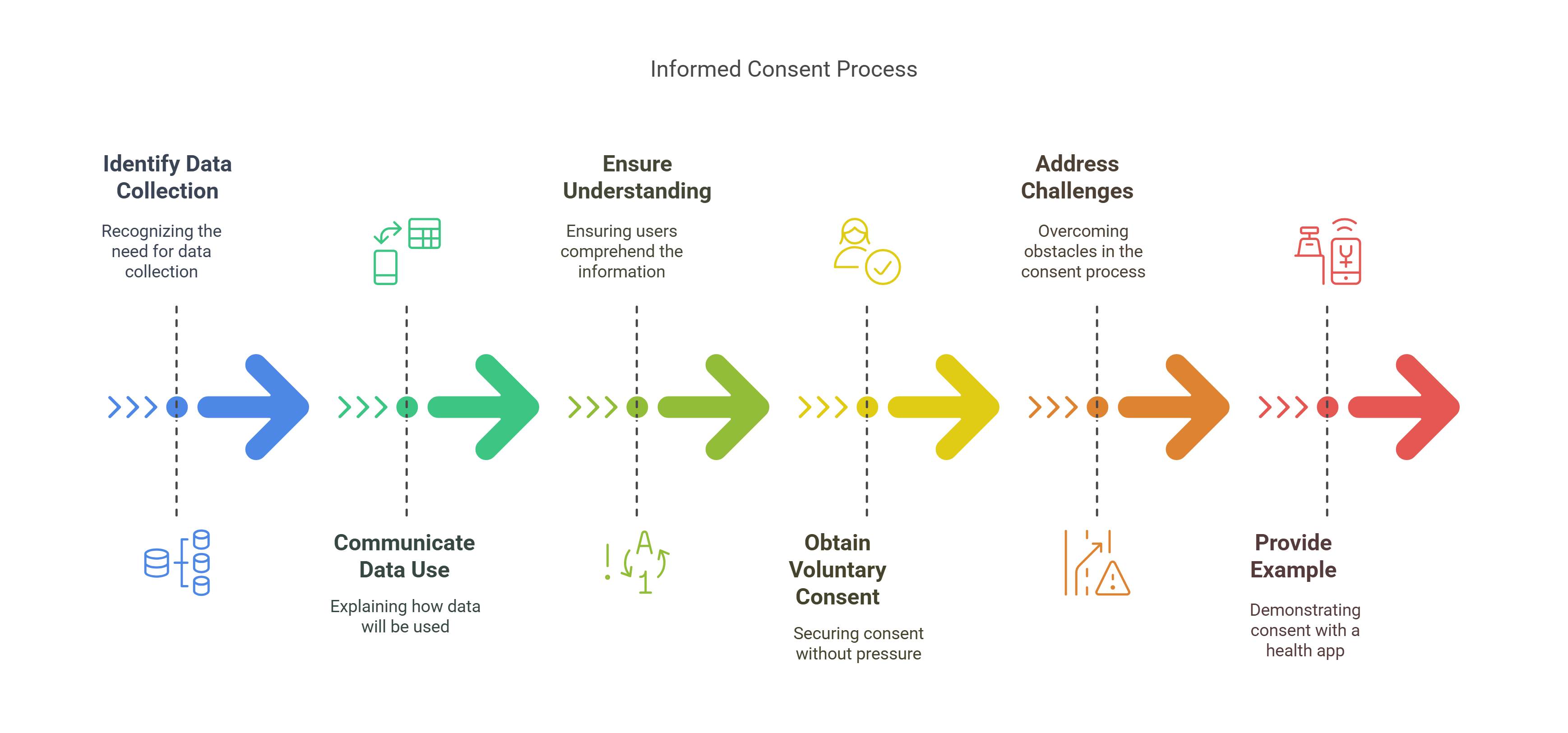

4. Informed Consent

Informed consent ensures that individuals understand and agree to the collection and use of their data. It requires clear communication of how data will be used, who will access it, and what risks are involved.

- Key Components:

- Clarity: Avoiding technical jargon so users can easily understand terms.

- Voluntariness: Consent must be given freely, without coercion or pressure.

- Specificity: Consent should be context-specific and purpose-limited.

- Challenges:

- Long, complex privacy policies often discourage users from fully understanding consent agreements.

- Ensuring ongoing consent for data use over time.

Example: A health app must explicitly inform users how their medical data will be stored, analyzed, and shared.
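The specificity and voluntariness components can be sketched as a small consent record that tracks exactly which purposes a user has agreed to and lets the user withdraw at any time. The `ConsentRecord` class and the purpose names are hypothetical, not taken from any real health app.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical record of what one user has agreed to."""
    user_id: str
    purposes: set = field(default_factory=set)

    def grant(self, purpose):
        self.purposes.add(purpose)

    def withdraw(self, purpose):
        # Withdrawing a purpose that was never granted is a harmless no-op.
        self.purposes.discard(purpose)

    def allows(self, purpose):
        # Specificity: consent for one purpose never implies another.
        return purpose in self.purposes


consent = ConsentRecord(user_id="user-123")
consent.grant("store_medical_data")
print(consent.allows("store_medical_data"))      # True
print(consent.allows("share_with_researchers"))  # False
consent.withdraw("store_medical_data")
print(consent.allows("store_medical_data"))      # False
```

Checking `allows` before every use of the data is one way to treat consent as ongoing rather than a one-time checkbox.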

Explained Simply: Informed consent is like asking permission before borrowing something, ensuring the owner knows exactly how it will be used.

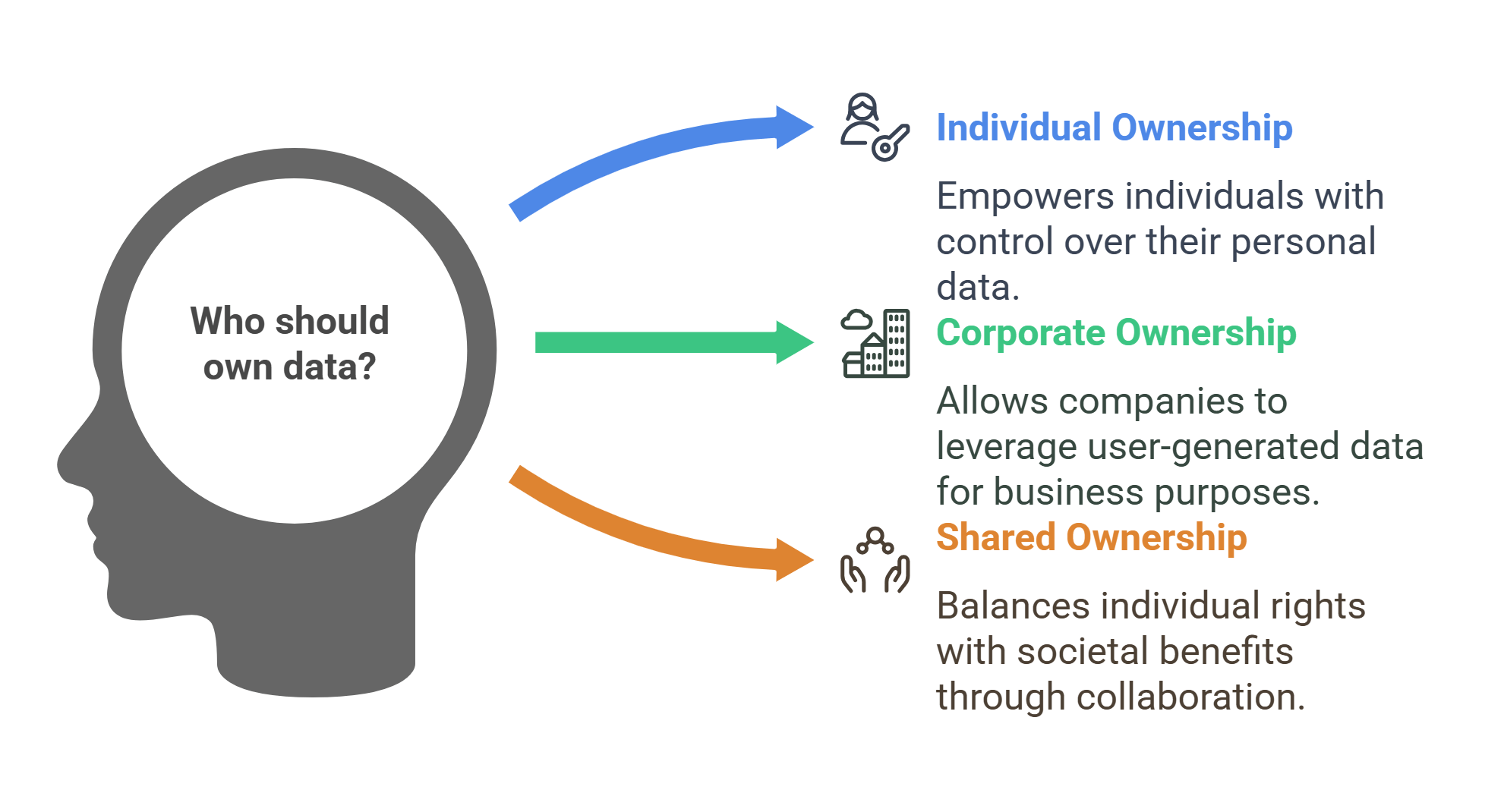

5. Data Ownership

Data ownership defines who has legal rights over data, including its collection, use, and distribution. It raises questions about whether individuals, organizations, or governments should control data and its associated value.

- Key Considerations:

- Individual Ownership: Under laws such as the GDPR, people have the right to access, correct, and delete their personal data.

- Corporate Ownership: Companies often claim rights over user-generated data (e.g., social media posts).

- Shared Ownership: Collaborative approaches that balance individual rights with societal benefits.

- Implications:

- Establishing clear data ownership can reduce disputes over intellectual property and privacy.

- Ambiguities can lead to misuse or exploitation of personal data.

Example: Fitness tracker companies using user-generated health data for research must clarify ownership and consent terms.

Explained Simply: Data ownership is like determining who owns the recipe for a dish made with your ingredients.

6. Ethical AI Development

Ethical AI development ensures that artificial intelligence systems are designed, implemented, and operated in ways that align with societal values, fairness, and human rights. It focuses on minimizing harm and maximizing benefits for all stakeholders.

- Core Principles:

- Fairness: Avoiding bias and ensuring equitable outcomes for diverse groups.

- Accountability: Clearly defining responsibility for AI decisions and impacts.

- Transparency: Making AI systems understandable and explainable.

- Privacy: Respecting and safeguarding user data.

- Challenges:

- Balancing innovation with regulation to prevent misuse.

- Ensuring AI aligns with ethical norms in different cultural contexts.

Example: Ethical AI guidelines call for self-driving cars to prioritize safety for all road users and to avoid decision-making biased by demographics or social factors.

Explained Simply: Ethical AI development is like teaching a powerful tool to work fairly, safely, and respectfully for everyone.

7. Anonymization of Data

Anonymization involves removing or masking personal identifiers from datasets so individuals cannot be easily identified. It is a critical practice for protecting privacy while allowing data to be used for research and analysis.

- Key Techniques:

- Data Masking: Replacing sensitive information with pseudonyms or random values.

- Aggregation: Summarizing data to eliminate individual identifiers (e.g., reporting average incomes).

- Generalization: Reducing specificity in data (e.g., using age ranges instead of exact ages).
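The three techniques above can be sketched in a few lines. The records, the salt value, and the helper names are made up for illustration. Note that a salted hash is only a pseudonym: anyone who knows the salt and can guess the names can recompute it, so this is not a strong guarantee on its own.

```python
import hashlib
from statistics import mean

# Made-up records for illustration.
patients = [
    {"name": "Ana", "age": 34, "income": 52000},
    {"name": "Ben", "age": 37, "income": 48000},
    {"name": "Cho", "age": 61, "income": 74000},
]


def mask_name(name, salt="demo-salt"):
    """Data masking: replace a name with a pseudonym derived from a salted hash."""
    digest = hashlib.sha256((salt + name).encode("utf-8")).hexdigest()
    return "person-" + digest[:8]


def generalize_age(age, width=10):
    """Generalization: report a range such as '30-39' instead of an exact age."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


masked = [
    {"id": mask_name(p["name"]), "age_range": generalize_age(p["age"])}
    for p in patients
]
# Aggregation: publish one summary figure instead of individual incomes.
average_income = mean(p["income"] for p in patients)

print(masked)
print("average income:", average_income)
```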

- Challenges:

- Reidentification risks arise when anonymized data is combined with other datasets.

- Balancing anonymization with data usability for meaningful insights.
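One common way to reason about reidentification risk is k-anonymity, a technique not named in this guide: every combination of indirectly identifying attributes (often called quasi-identifiers) should be shared by at least k people. The sketch below computes the smallest such group in a made-up dataset; the column names and records are assumptions for illustration.

```python
from collections import Counter


def smallest_group_size(records, quasi_identifiers):
    """Size of the rarest combination of quasi-identifier values (the 'k')."""
    combos = Counter(
        tuple(record[column] for column in quasi_identifiers) for record in records
    )
    return min(combos.values())


# Made-up "anonymized" records: names removed, but other attributes remain.
records = [
    {"age_range": "30-39", "postal_prefix": "021", "diagnosis": "flu"},
    {"age_range": "30-39", "postal_prefix": "021", "diagnosis": "asthma"},
    {"age_range": "60-69", "postal_prefix": "945", "diagnosis": "diabetes"},
]
k = smallest_group_size(records, ["age_range", "postal_prefix"])
print("k =", k)
```

A result of k = 1 means at least one person is unique on those attributes, so anyone holding another dataset with the same age range and postal prefix could link it to that person's diagnosis. Generalizing further raises k but makes the data less precise, which is the usability trade-off noted above.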

Example: Health researchers use anonymized patient data to study disease patterns without exposing personal details.

Explained Simply: Anonymization is like blurring faces in a photo, protecting identities while keeping the bigger picture visible.

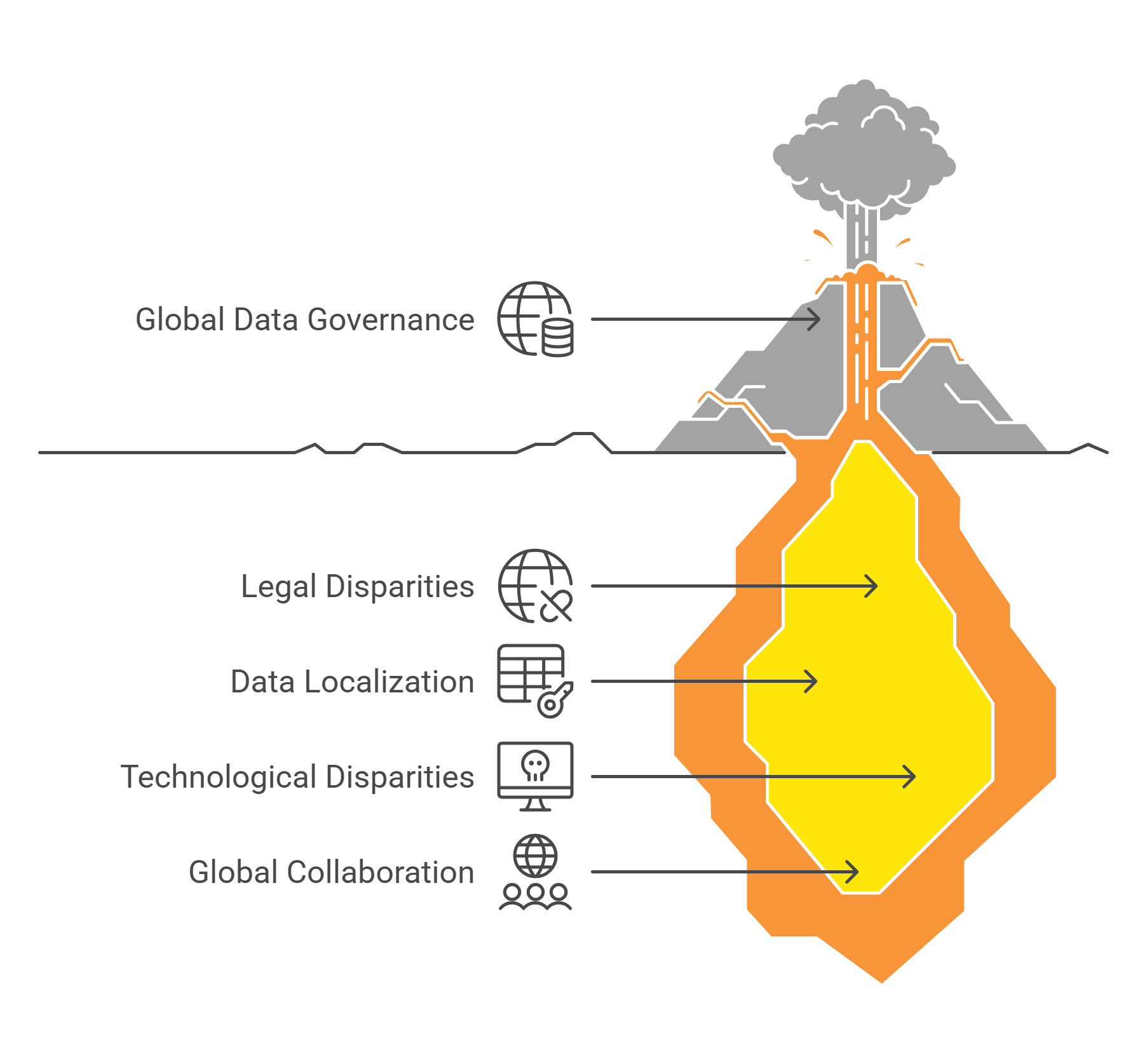

8. Global Data Governance

Global data governance refers to the frameworks, policies, and agreements that regulate how data is collected, stored, and shared across international borders. It seeks to balance national sovereignty, individual rights, and global collaboration.

- Key Challenges:

- Differing national laws, such as the EU’s GDPR versus weaker privacy protections in other regions.

- Conflicts between data localization requirements and global data flows.

- Addressing disparities in technological capabilities between developed and developing nations.

- Efforts to Harmonize Governance:

- OECD Guidelines on Privacy: Aim to standardize data protection principles across countries.

- Cross-Border Data Flow Agreements: Aim to facilitate trade and innovation while respecting privacy.

Example: Disputes over cross-border data transfers between the EU and the U.S., such as the EU Court of Justice's 2020 ruling that invalidated the Privacy Shield framework, highlight the complexities of aligning global data standards.

Explained Simply: Global data governance is like setting universal traffic rules for the information superhighway.

9. Responsible Data Usage

Responsible data usage ensures that data is handled ethically, transparently, and for legitimate purposes. It prioritizes minimizing harm, respecting privacy, and maximizing societal benefits.

- Core Principles:

- Purpose Limitation: Data should only be used for the purpose for which it was collected.

- Minimization: Collecting only the data necessary for achieving specific goals.

- Inclusivity: Ensuring data practices benefit all stakeholders, not just a privileged few.

- Applications:

- Companies using customer data to improve services without exploiting or misleading users.

- Governments leveraging data for public health while respecting privacy rights.

Example: During the COVID-19 pandemic, responsibly using anonymized mobility data helped track virus spread while preserving individual privacy.
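A rough sketch of how mobility data might be reported without exposing individuals: count trips between regions and suppress any count small enough to point at a handful of people. The region names, the trips, and the `MIN_COUNT` threshold are all hypothetical; this is an illustration of the idea, not a description of how any pandemic-era dataset was actually produced.

```python
from collections import Counter

MIN_COUNT = 3  # Hypothetical suppression threshold; real thresholds vary.


def trips_per_region(trips, min_count=MIN_COUNT):
    """Count trips per (origin, destination) and drop small, revealing counts."""
    counts = Counter((trip["origin"], trip["destination"]) for trip in trips)
    return {pair: n for pair, n in counts.items() if n >= min_count}


# Made-up trips; device identifiers are deliberately never included.
trips = (
    [{"origin": "north", "destination": "center"}] * 4
    + [{"origin": "south", "destination": "center"}] * 3
    + [{"origin": "east", "destination": "west"}] * 1
)
print(trips_per_region(trips))
```

The single east-to-west trip is left out of the report because publishing it could reveal one person's movements.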

Explained Simply: Responsible data usage is like borrowing a tool: you use it only for the agreed job, handle it carefully, and return it as promised.

10. Surveillance Ethics

Surveillance ethics examines the moral implications of monitoring individuals through technologies like CCTV, online tracking, and facial recognition. It seeks to balance security, public safety, and individual privacy.

- Key Ethical Concerns:

- Consent: Ensuring individuals are aware of and agree to being monitored.

- Proportionality: Surveillance should be justified by its intended outcomes, avoiding overreach.

- Transparency: Informing the public about surveillance practices and their purposes.

- Applications:

- Surveillance is used for crime prevention, public safety, and national security.

- Ethical issues arise when surveillance leads to mass data collection, racial profiling, or misuse of information.

Example: Debates over the use of facial recognition in public spaces highlight concerns about consent and potential misuse for political or social control.

Explained Simply: Surveillance ethics is like setting boundaries on how closely someone may watch over your shoulder, so that the watching stays fair and justified.

✨ Conclusion

Data ethics is crucial for navigating a world increasingly driven by data and algorithms. By understanding topics like algorithmic transparency, anonymization, and surveillance ethics, readers can critically engage with RC passages and explore how ethical principles guide technology’s impact on society.